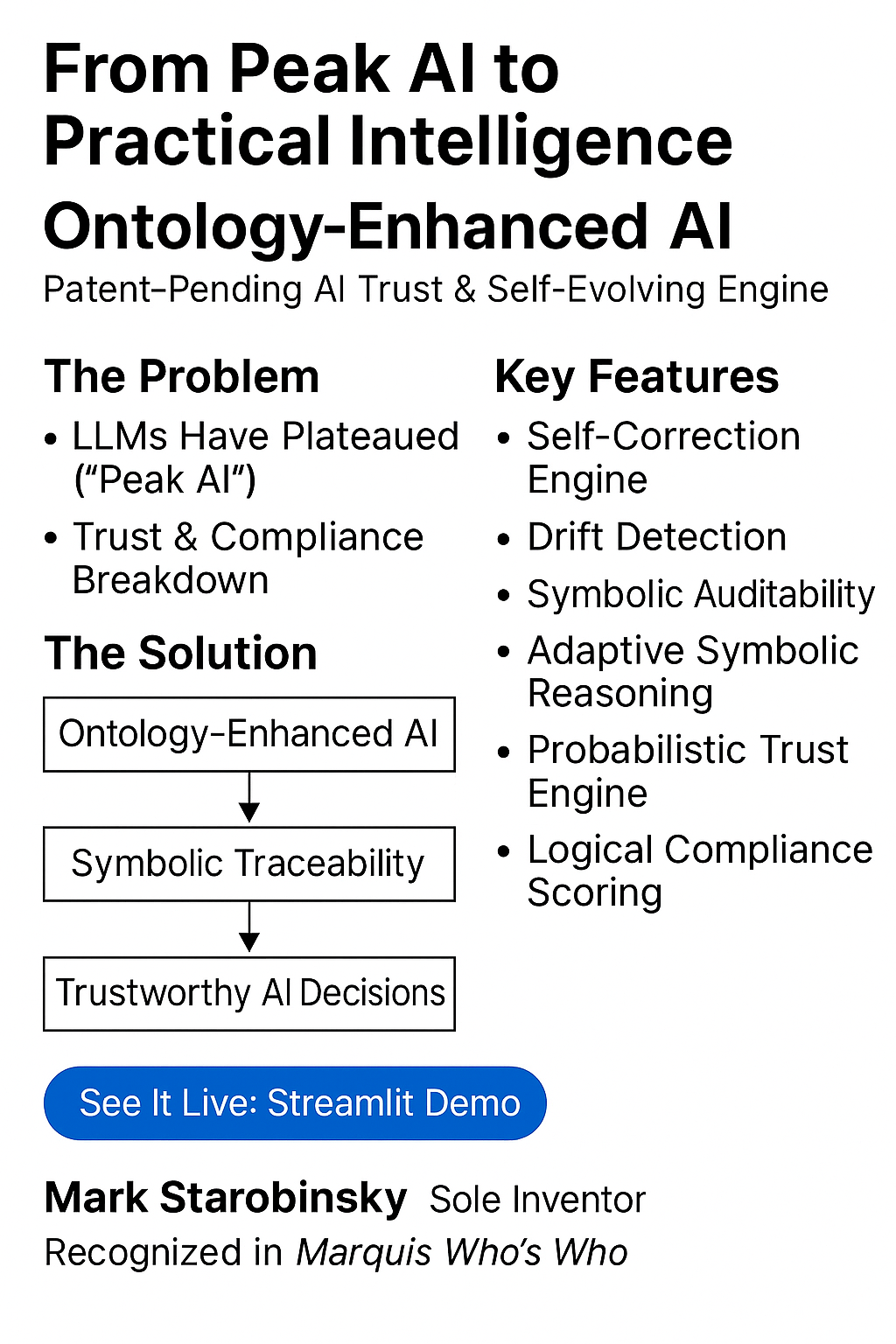

ONTOGUARD AI

Ontology AI Decision Authorization Layer

Ontology AI authorization for high-stakes workflows.

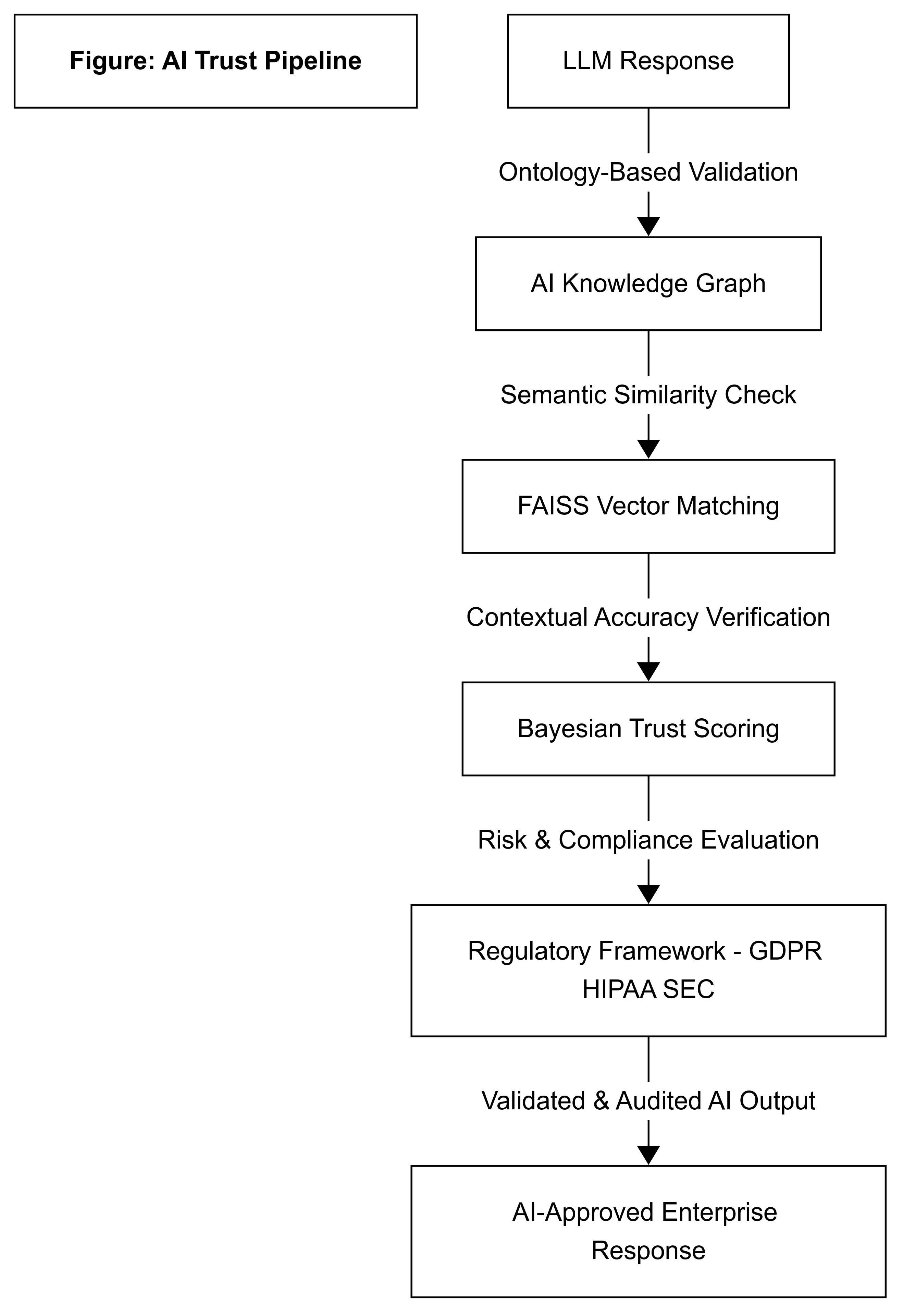

OntoGuard sits between your LLM and the real world, deciding whether AI output can become action, with proof.

Works with any LLM and any data source. No costly retraining — policies and requirements can change without reworking your model.

What you get at each step: ALLOW / BLOCK / ESCALATE, plus reasons and a replayable proof pack (Governance Report in JSON and PDF, evidence and provenance, policy snapshot with hashes, and decision trail).
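To make the per-step output concrete, here is a minimal Python sketch of what a decision record with a hashed policy snapshot could look like. It is an illustration only, not OntoGuard's actual API: the names `Verdict`, `Decision`, `snapshot_hash`, `authorize`, and `replay`, the policy fields `forbidden_types` and `review_above`, and the toy rules themselves are all assumptions made for this example.

```python
import hashlib
import json
from dataclasses import dataclass, field
from enum import Enum


class Verdict(Enum):
    ALLOW = "ALLOW"
    BLOCK = "BLOCK"
    ESCALATE = "ESCALATE"


@dataclass
class Decision:
    """Hypothetical decision record: verdict, reasons, and proof fields."""
    verdict: Verdict
    reasons: list[str]
    policy_hash: str  # SHA-256 of the policy snapshot used for this decision
    evidence: list[str] = field(default_factory=list)


def snapshot_hash(policy: dict) -> str:
    # Canonical JSON (sorted keys, fixed separators) so equal policies hash equally.
    canonical = json.dumps(policy, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def authorize(action: dict, policy: dict) -> Decision:
    """Toy rules (assumed): block forbidden action types, escalate large amounts."""
    if action["type"] in policy["forbidden_types"]:
        verdict = Verdict.BLOCK
        reasons = [f"action type {action['type']!r} is forbidden by policy"]
    elif action.get("amount", 0) > policy["review_above"]:
        verdict = Verdict.ESCALATE
        reasons = [f"amount exceeds review threshold {policy['review_above']}"]
    else:
        verdict = Verdict.ALLOW
        reasons = ["no policy rule triggered"]
    return Decision(
        verdict=verdict,
        reasons=reasons,
        policy_hash=snapshot_hash(policy),
        evidence=list(action.get("sources", [])),
    )


def replay(action: dict, policy: dict, recorded: Decision) -> bool:
    """Re-run the decision and check it matches the recorded verdict and policy hash."""
    fresh = authorize(action, policy)
    return (
        fresh.verdict == recorded.verdict
        and fresh.policy_hash == recorded.policy_hash
    )


if __name__ == "__main__":
    policy = {"forbidden_types": ["delete_records"], "review_above": 1000}
    action = {"type": "refund", "amount": 5000, "sources": ["ticket-123"]}
    decision = authorize(action, policy)
    print(decision.verdict.value, decision.reasons)
    print("replayable:", replay(action, policy, decision))
```

Hashing a canonical serialization of the policy is one common way to tie a decision to the exact rules in force when it was made: if the policy changes later, replaying against the new policy produces a different hash, so the mismatch is detectable.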